a blog about unixy stuff and so on

2011/06/09 More SSL/TLS/HTTPS benchmarks

In an attempt to dispel the myths about https and performance, I recently gave a talk at Optimera Stockholm on the subject. This blog post describes how the benchmarks were done, which hardware was used, and some details about the network setup.

Test setup

The test was conducted between a MacBook Pro with a Core 2 Duo CPU running at 2.4 GHz (client) and a quad-core Intel CPU running FreeBSD 8.2 (server). The network was a switched network at 100 Mbit/s.

dmesg | grep -i cpu

CPU: Intel(R) Core(TM)2 Quad CPU Q6600 @ 2.40GHz (2394.01-MHz K8-class CPU)

FreeBSD/SMP: Multiprocessor System Detected: 4 CPUs

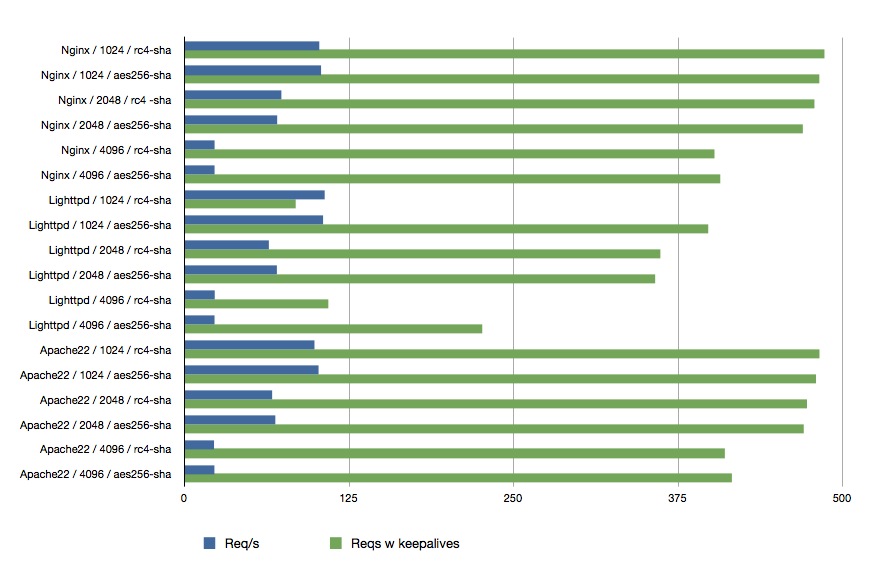

To perform the test, romab.com's index.html was fetched 10000 times, both concurrently and in sequence, and with and without keep-alives. Three web servers were tested: apache22, lighttpd 1.4.27 and nginx 1.0.2. Each server was tested with multiple settings. Two ciphers were used, aes256 and rc4. In addition, 1024, 2048 and 4096 bit public key lengths were used to see how the key length impacted performance.
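For anyone who wants to repeat something similar, here is a rough sketch of how the client-side runs could be scripted with ab (ApacheBench, the tool used for these tests). It is not the script I used. The URL is pieced together from the sample ab output further down, `AES256-SHA` is an assumed name for the aes256 suite, and the concurrency levels 1 and 320 are just examples. The public key length is not an ab option; it comes from the server certificate, so it has to be changed on the server between runs.

```python
from itertools import product

# Assumptions for illustration only: the URL is taken from the sample ab
# output, and AES256-SHA is a guess at the exact aes256 suite name.
URL = "https://nsa.research.romab.com/index.html"
CIPHERS = ["AES256-SHA", "RC4-SHA"]
REQUESTS = 10000


def ab_command(cipher, concurrency, keepalive):
    """Return the argv list for one ab run."""
    cmd = ["ab", "-n", str(REQUESTS), "-c", str(concurrency), "-Z", cipher]
    if keepalive:
        cmd.append("-k")  # reuse connections (HTTP keep-alive)
    cmd.append(URL)
    return cmd


def test_matrix(concurrency_levels=(1, 320)):
    """Every cipher x concurrency x keep-alive combination."""
    return [
        ab_command(cipher, c, k)
        for cipher, c, k in product(CIPHERS, concurrency_levels, (False, True))
    ]


if __name__ == "__main__":
    # Print the commands instead of running them, so nothing hits the network.
    for cmd in test_matrix():
        print(" ".join(cmd))
```

Each printed line can be pasted into a shell, or the argv lists can be handed to `subprocess.run` once per server and key length.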

The first test used sequential connections from a single client. Note that this does not really produce a realistic workload, as a high-load website will most likely have hundreds of connections in parallel. Regardless, it gives us valuable insight into how well the servers perform.

Now, that is interesting. We see that all web servers gain a massive performance increase when keep-alives are enabled. We can also see that the choice of cipher doesn't impact performance that much, but the length of the public key sure does! Lighttpd performed really badly here, and I actually redid the benchmarks, but no, it still does not perform well. This might be due to my lack of knowledge of how to configure lighttpd, a bug in lighttpd on FreeBSD with SSL, or simply that lighttpd does not perform very well when dealing with https.

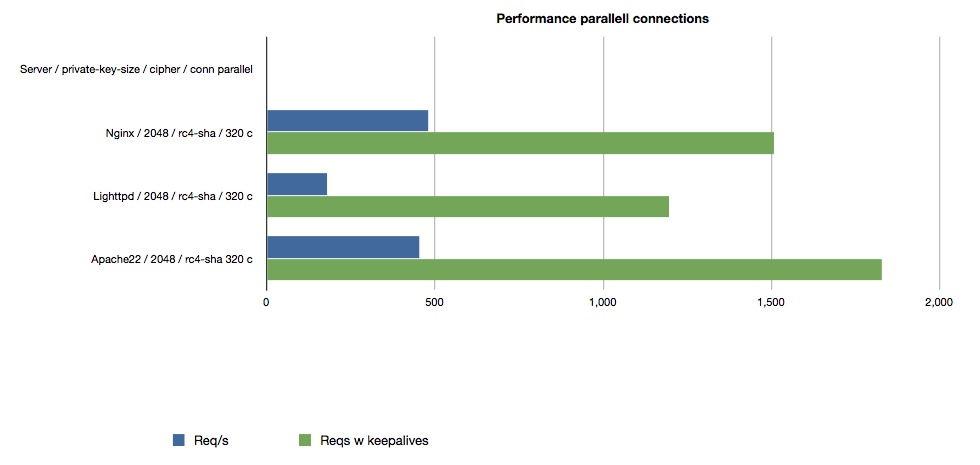

To get a realistic workload we need to add a massive number of clients in parallel, and this is where we start seeing a difference between the servers: apache doesn't allow you to set MaxClients above 256, and lighttpd stops giving reliable responses after 400 clients. Nginx, on the other hand, just keeps on going. I used up to 800 parallel clients against nginx, and after that I stopped benchmarking. The picture below illustrates 320 parallel connections, the highest I could go before apache threw in the towel.

The software used for the actual tests was the ApacheBench program, or "ab" as it is called on most unix systems. All graphs on this page were created from data that ab produces. Here is a sample of what the raw data looks like:

This is ApacheBench, Version 2.3 <$Revision: 655654 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking nsa.research.romab.com (be patient)

Server Software: nginx/1.0.2

Server Hostname: nsa.research.romab.com

Server Port: 443

SSL/TLS Protocol: TLSv1/SSLv3,RC4-SHA,2048,128

Document Path: /index.html

Document Length: 5496 bytes

Concurrency Level: 800

Time taken for tests: 11.310 seconds

Complete requests: 10000

Failed requests: 0

Write errors: 0

Keep-Alive requests: 10000

Total transferred: 57131424 bytes

HTML transferred: 54970992 bytes

Requests per second: 884.17 [#/sec] (mean)

Time per request: 904.801 [ms] (mean)

Time per request: 1.131 [ms] (mean, across all concurrent requests)

Transfer rate: 4933.01 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 353 1238.8 0 6437

Processing: 196 534 540.1 390 3240

Waiting: 196 534 540.1 390 3240

Total: 196 887 1676.8 390 6972

Percentage of the requests served within a certain time (ms)

50% 390

66% 390

75% 391

80% 392

90% 395

95% 6674

98% 6924

99% 6949

100% 6972 (longest request)
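The summary figures in the sample above can be recomputed from the raw counters, which is a handy sanity check when turning ab output into graphs. The sketch below is not the script behind the graphs on this page. It parses a trimmed copy of the sample and rederives requests per second, time per request and transfer rate. The helper names `field` and `derive` are made up for this example.

```python
import re

# Subset of the sample output above, trimmed to the fields used here.
SAMPLE = """\
Concurrency Level: 800
Time taken for tests: 11.310 seconds
Complete requests: 10000
Total transferred: 57131424 bytes
Requests per second: 884.17 [#/sec] (mean)
Time per request: 904.801 [ms] (mean)
"""


def field(text, label):
    """Return the first number following 'label:' as a float."""
    match = re.search(rf"^{re.escape(label)}:\s*([\d.]+)", text, re.MULTILINE)
    if match is None:
        raise ValueError(f"field not found: {label}")
    return float(match.group(1))


def derive(text):
    """Recompute ab's summary figures from the raw counters."""
    n = field(text, "Complete requests")
    secs = field(text, "Time taken for tests")
    conc = field(text, "Concurrency Level")
    total = field(text, "Total transferred")
    return {
        "requests_per_second": n / secs,
        "ms_per_request": conc * secs * 1000 / n,  # mean, per client
        "ms_per_request_all": secs * 1000 / n,     # across all clients
        "kbytes_per_second": total / 1024 / secs,
    }


if __name__ == "__main__":
    for name, value in derive(SAMPLE).items():
        print(f"{name}: {value:.2f}")
```

The recomputed values should land within rounding distance of what ab reports, since ab prints the elapsed time with only three decimals.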